A new iteration of HTTP, the protocol that powers the World Wide Web, is now with us. Designated HTTP/3, it’s been in development since 2018 and is currently in the Internet Draft stage of the Standards Track. There are already browsers that are making use of it, some unofficially, some officially but off by default (Chrome/Firefox).

Since its standardization as HTTP/1.1 in 1997, HTTP has been the dominant application layer protocol. Over the years, HTTP has had to be upgraded significantly to keep pace with the growth of the internet and the sheer variety of content exchanged over the web.

This article is a deep dive into HTTP/3. It presents the evolution of the HTTP protocol with a focus on HTTP/3’s features, explains why HTTP/3 is needed, and closes with a peek into the future of an Internet powered by HTTP/3.

Background – Why do we need HTTP/3?

HTTP spawns the Internet

When Tim Berners-Lee conceived of the World Wide Web, the Hypertext Transfer Protocol (HTTP) was a “one-line protocol”. An HTTP/0.9 request was a single string of ASCII text: the GET method, followed by the document address and an optional port address, terminated with a carriage return (CR) and line feed (LF). Only HTML files could be transmitted and there were no HTTP headers. It didn’t have status or error codes either.

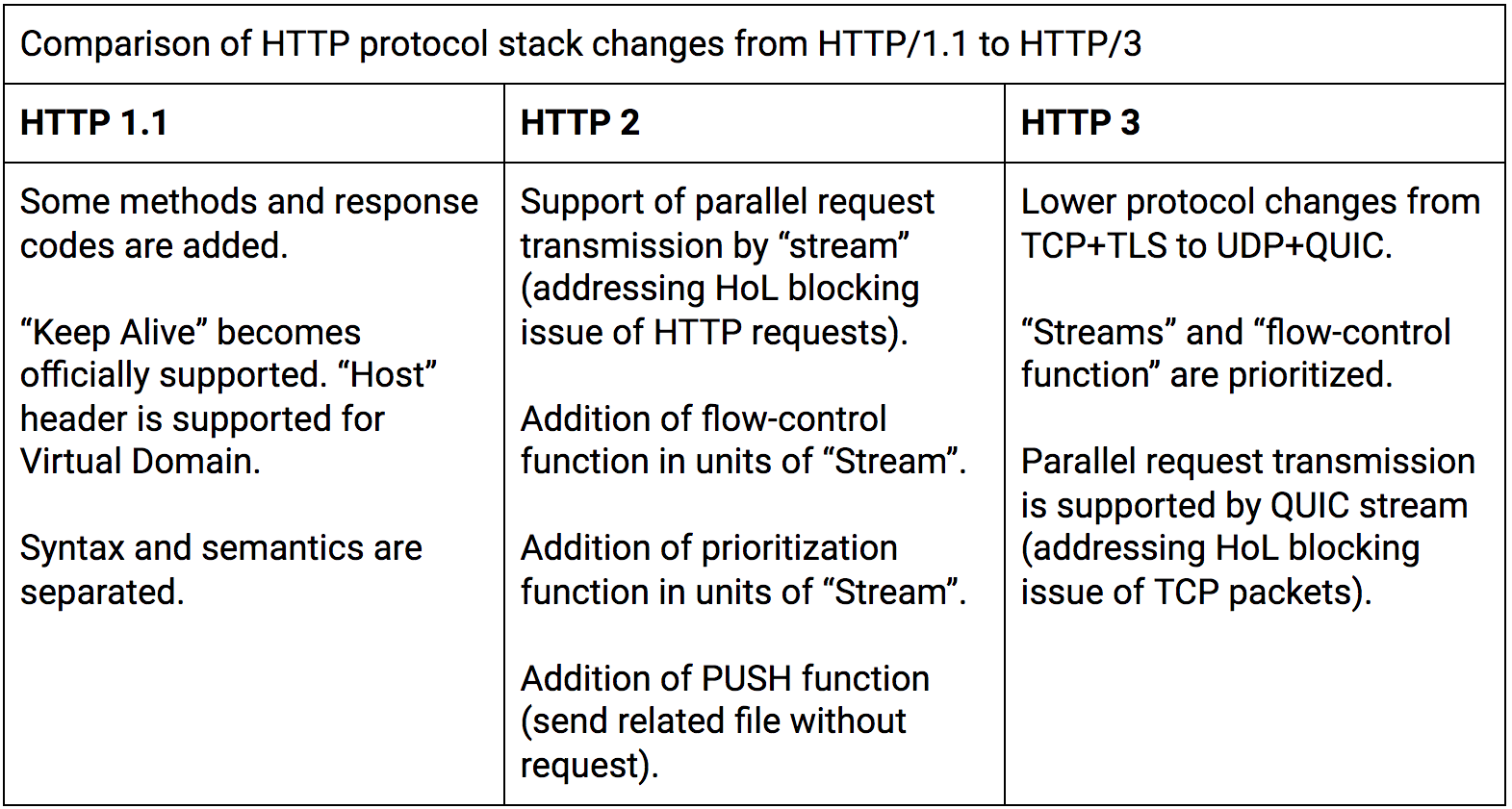
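To make the “one-line protocol” concrete, here is a minimal sketch that builds and parses an HTTP/0.9-style request as described above (GET, the document address, then CR LF). The function names are hypothetical and exist only for illustration.

```python
def build_http09_request(path: str) -> bytes:
    """Build an HTTP/0.9-style request: GET, the document address, then CR LF."""
    if not path.startswith("/"):
        raise ValueError("document address must start with '/'")
    return f"GET {path}\r\n".encode("ascii")


def parse_http09_request(raw: bytes) -> str:
    """Return the requested document address, or raise ValueError if malformed."""
    line = raw.decode("ascii")
    if not line.endswith("\r\n"):
        raise ValueError("request must end with CR LF")
    method, _, path = line[:-2].partition(" ")
    if method != "GET" or not path:
        raise ValueError("HTTP/0.9 only supports 'GET <address>'")
    return path


request = build_http09_request("/index.html")
print(request)
print(parse_http09_request(request))
```

Note that there is nowhere to put headers, a status code, or a content type; the response would simply be the HTML document itself.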

Over the years, HTTP has evolved from HTTP/1.0 to HTTP/1.1 and then to HTTP/2. At every iteration, new features have been added to the protocol to handle the needs of the time, such as application layer requirements, security considerations, session handling, and media types. For an in-depth look at HTTP/2 and its evolution from HTTP/1.0, take a look at the section From humble origins – A brief history of HTTP in our topic HTTP evolution – HTTP-2 Deep Dive. For an in-depth comparison, check out our HTTP/2 vs HTTP/3 post.

Comparison table of what each version introduced over the previous one.

HTTP/2 under strain as the Internet grows

During the evolution of the Internet and HTTP, the underlying transport mechanism of HTTP has, by-and-large, remained the same. However, as internet traffic has grown following massive public adoption of mobile device technology, HTTP as a protocol has struggled to provide a smooth, transparent web browsing experience. And with increasing demand for realtime applications, HTTP/2’s shortcomings were becoming more and more evident.

For one, the final version of HTTP/2 didn’t include many of the hoped-for improvements in the step-up from HTTP/1.1. In order to remain backward compatible with HTTP/1.1, the protocol had to retain the same POST and GET requests, status codes (200, 301, 404, and 500), and so on.

Also, some of the features that were implemented introduced security issues. Apart from the legacy lack of mandatory encryption, these included compression of page headers and cookies that was vulnerable to attack.

The aim of HTTP/3 is to provide fast, reliable, and secure web connections across all forms of devices by straightening out the transport-related issues of HTTP/2. To do this, it uses a different transport layer protocol called QUIC, which runs over the User Datagram Protocol (UDP) instead of the TCP used by all previous versions of HTTP.

Unlike TCP, which delivers data strictly in order, UDP delivers each datagram independently with no ordering guarantee. Among other things, this helps address head-of-line (HoL) blocking issues at the packet level.

The difference between message ordering in TCP and UDP. SYN=synchronize; ACK=acknowledge

Additionally, QUIC has redesigned the way handshakes are performed between client and server, reducing the latency associated with establishing repeated connections. Here’s a general idea; we’ll get into more detail in the Limitations of TCP section:

Number of messages to first byte of data on a repeat connection in TCP, TCP+TLS, and QUIC.

But surely, I hear you say, TCP is a more reliable protocol than UDP; why redesign the transport layer of HTTP over UDP? We’ll look at the limitations of running HTTP over TCP shortly. First, let’s look at what HTTP/3 is, and dive into the design considerations for HTTP/3 based on the QUIC protocol.

The emergence of HTTP/3

While the Internet Engineering Task Force (IETF) formally standardized HTTP/2, Google was independently building gQUIC, a new transport protocol. The initial experiments with gQUIC proved to be very encouraging in enhancing the web browsing experience under poor network conditions.

Support to adopt gQUIC gained momentum and it was renamed QUIC. A majority of IETF members voted to build a new specification for HTTP to run over QUIC (and indeed called it “HTTP over QUIC”). This new initiative came to be known as HTTP/3.

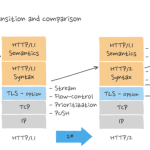

HTTP/3 is similar to HTTP/2 in syntax and semantics. HTTP/3 follows the same sequence of request and response message exchanges, with a data format that contains methods, headers, status codes, and body. However, HTTP/3’s significant difference lies in the stacking order of protocol layers on top of UDP, as shown in the following diagram.

Stacking order in HTTP/3 showing QUIC overlapping both the security layer and part of the transport layer.

How HTTP/3 and QUIC solve the limitations of TCP

The advantages of HTTP/3 functionality come from the underlying QUIC protocol. Before we talk about QUIC and UDP it’s worthwhile to list some of the limitations of TCP that led to the development of QUIC in the first place.

Connectionless UDP, as used in QUIC, does not request data resend on error.

Limitations of TCP

TCP can intermittently hang your data transmission

TCP's receiver sliding window does not progress if a segment with a lower sequence number hasn't been received yet, even if segments with higher sequence numbers have. This can cause the TCP stream to hang momentarily or even close, even if only one segment failed to arrive. This problem is known as packet-level Head-of-Line (HoL) blocking of the TCP stream.

Packet-level Head-of-Line blocking can cause a TCP stream to close.

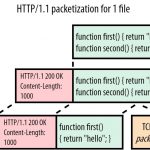
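The stall described above can be reproduced with a toy receiver. This is a simplified sketch, not real TCP: it numbers whole segments instead of bytes, and the function name is made up for this example.

```python
def deliver_in_order(arrivals):
    """Simulate a receiver that only releases data in sequence-number order.

    `arrivals` is the order in which segment numbers reach the receiver.
    Returns a list of (arrived_segment, segments_released_to_application).
    """
    expected = 0          # next sequence number the application is waiting for
    buffered = set()      # segments received ahead of `expected`
    timeline = []
    for seq in arrivals:
        buffered.add(seq)
        released = []
        # Release every contiguous segment starting at `expected`.
        while expected in buffered:
            buffered.remove(expected)
            released.append(expected)
            expected += 1
        timeline.append((seq, released))
    return timeline


# Segment 1 is lost and only arrives after a retransmission.
for arrived, released in deliver_in_order([0, 2, 3, 4, 1]):
    print(f"segment {arrived} arrived -> released {released}")
```

Segments 2, 3, and 4 sit in the buffer and nothing reaches the application until segment 1 finally shows up, at which point all four are released at once.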

TCP does not support stream level multiplexing

While TCP does allow multiple logical connections to and from the application layer, it doesn’t allow multiplexing of data packets within a single TCP stream. With HTTP/2, the browser can open only one TCP connection with the server. It uses the same connection to request multiple objects, such as CSS, JavaScript, and other files. While receiving these objects, TCP serializes all the objects in the same stream. As a result, it has no idea about the object-level partitioning of TCP segments.

TCP incurs redundant communication

When doing the connection handshake, TCP exchanges a sequence of messages, some of which are redundant if the connection is established with a known host.

TCP+TLS handshake at the start of a communication session that uses TLS encryption. Source: What Happens in a TLS Handshake? | SSL Handshake

A new transport protocol – the QUIC (not dirty) solution

The QUIC protocol addresses these TCP limitations by introducing a few changes in the underlying transmission mechanism. These changes arise from the following design choices:

UDP as the choice for underlying transport layer protocol

A new transport mechanism built over TCP would inherit all the disadvantages of TCP described earlier; building on UDP avoids them. An added bonus is that QUIC is implemented at the user level, so it doesn’t require changes in the kernel with every protocol upgrade, paving the road to easier adoption.

Stream multiplexing and flow control

QUIC introduces the notion of multiple streams multiplexed on a single connection. QUIC by design implements separate, per-stream flow control, which solves the problem of head-of-line blocking (HoL) of the entire connection. HTTP/2 had originally addressed only the HTTP-level HoL problem. However, in HTTP/2, packet-level HoL of the TCP stream could still block all transactions on the connection.

By using UDP, there is no packet-level Head-of-Line blocking.
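To illustrate why independent streams help, the following toy model orders data within each stream only. It is a sketch under simplifying assumptions (chunk counters instead of byte offsets, made-up stream names and function name) and does not reflect any particular QUIC implementation.

```python
from collections import defaultdict


def deliver_per_stream(arrivals):
    """Release data in order within each stream, independently of other streams.

    `arrivals` is a list of (stream_id, offset) pairs in arrival order.
    Returns a list of ((stream_id, offset), released_offsets_for_that_stream).
    """
    expected = defaultdict(int)   # next offset expected on each stream
    buffered = defaultdict(set)   # out-of-order chunks held per stream
    timeline = []
    for stream_id, offset in arrivals:
        buffered[stream_id].add(offset)
        released = []
        while expected[stream_id] in buffered[stream_id]:
            buffered[stream_id].remove(expected[stream_id])
            released.append(expected[stream_id])
            expected[stream_id] += 1
        timeline.append(((stream_id, offset), released))
    return timeline


# Chunk 0 of stream "css" is lost and retransmitted last; stream "js" is unaffected.
arrivals = [("js", 0), ("css", 1), ("js", 1), ("js", 2), ("css", 0)]
for chunk, released in deliver_per_stream(arrivals):
    print(f"{chunk} arrived -> released {released}")
```

Every chunk of the "js" stream is released as soon as it arrives, while only the "css" stream waits for its missing chunk.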

Flexible congestion control

TCP has a rigid congestion control mechanism. Every time the TCP protocol detects congestion, it halves the size of the congestion window. QUIC’s congestion control is more flexible and makes more efficient use of the available network bandwidth, resulting in better traffic throughput.
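The window-halving behaviour can be traced with a few lines of code. This sketch models the classic additive-increase, multiplicative-decrease pattern only; the initial window, the increase step, and the event names are assumptions chosen for illustration, and real TCP stacks and QUIC implementations use more elaborate algorithms.

```python
def simulate_cwnd(events, initial_cwnd=10, increase=1):
    """Trace a congestion window under additive increase, multiplicative decrease.

    `events` is a sequence of "ack" (a round trip without loss) or "loss"
    (congestion detected). Window sizes are in segments.
    """
    cwnd = initial_cwnd
    trace = [cwnd]
    for event in events:
        if event == "loss":
            cwnd = max(1, cwnd // 2)   # halve the window on congestion
        elif event == "ack":
            cwnd += increase           # grow slowly while the path is clear
        else:
            raise ValueError(f"unknown event: {event!r}")
        trace.append(cwnd)
    return trace


print(simulate_cwnd(["ack", "ack", "loss", "ack", "loss", "loss"]))
# -> [10, 11, 12, 6, 7, 3, 1]
```

On a lossy wireless link, where packets are dropped for reasons other than congestion, a few losses in a row shrink the window far faster than it can grow back.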

Better error handling

QUIC proposes to use an enhanced loss-recovery mechanism and forward error correction to deal with erroneous packets in a better way, especially with patchy and slow wireless networks prone to high rates of error in transmission.

Faster handshaking

QUIC uses the same TLS module as HTTP/2 for a secured connection. However, unlike TCP, QUIC’s handshake mechanism is optimized to avoid redundant protocol exchanges when two known peers establish communication with each other.

Setting up a secure connection with TCP and TLS takes at least two Round-Trip Times (RTTs), adding to the latency overhead. With QUIC, setting up the first encrypted connection is 1 RTT, and when the session is resumed, payload data is sent with the first packet, for a minimum of zero RTTs. This offers a consistent, across-the-board reduction in overall latency when compared to HTTP/2.

Comparison of numbers of RTT for different protocols between first and subsequent connections. Source: Research Collection.
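As a quick worked example, the round-trip counts quoted above translate into setup delay as follows. The 100 ms round-trip time is an assumed value for a slow mobile link, and the helper name is hypothetical.

```python
def setup_delay_ms(rtt_ms: float, handshake_rtts: int) -> float:
    """Delay spent on connection setup before the first request can be sent."""
    return rtt_ms * handshake_rtts


# Round-trip counts taken from the text above; the 100 ms RTT is an assumption.
SETUP_RTTS = {
    "TCP + TLS (minimum)": 2,
    "QUIC, first connection": 1,
    "QUIC, resumed session": 0,
}

for name, rtts in SETUP_RTTS.items():
    print(f"{name}: {setup_delay_ms(100, rtts):.0f} ms of setup delay")
```

The saving scales linearly with the round-trip time, which is why the difference is most visible on high-latency networks.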

Syntax and semantics

By stacking the HTTP/3-based application layer over QUIC, you get all the advantages of an enhanced transport mechanism while retaining the same syntax and semantics of HTTP/2. However, note that HTTP/2 cannot be directly integrated with QUIC because the underlying frame mapping from application to transport is incompatible. Consequently, IETF’s HTTP working group suggested HTTP/3 as the new HTTP version with its frame mapping modified as required by the frame format of QUIC protocol.

Compression

HTTP/3 also uses a new header compression mechanism called QPACK, which is a modification of HPACK used in HTTP/2. Under QPACK, the HTTP headers can arrive out of order in different QUIC streams. Unlike in HTTP/2, where TCP ensures in-order delivery of packets, QUIC streams can be delivered out of order relative to one another and may contain different headers in different streams. To deal with this, QPACK uses a lookup table mechanism for encoding and decoding the headers.
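The idea of a lookup table that tolerates out-of-order arrival can be sketched with a toy decoder. This is not the QPACK wire format: the class, its method names, and the `required_inserts` parameter are invented for illustration. It only shows the principle that a header block stating how many table insertions it depends on can be held back until those insertions arrive, without stalling anything else.

```python
class ToyHeaderDecoder:
    """Toy decoder that references headers by table index (not the QPACK wire format)."""

    def __init__(self):
        self.table = []      # dynamic table of (name, value) entries
        self.blocked = []    # header blocks waiting for table entries to arrive

    def insert(self, name, value):
        """Apply a table update, then return any header blocks it unblocks."""
        self.table.append((name, value))
        ready = [b for b in self.blocked if b[0] <= len(self.table)]
        self.blocked = [b for b in self.blocked if b[0] > len(self.table)]
        return [self._decode(indices) for _, indices in ready]

    def header_block(self, required_inserts, indices):
        """Decode a block now if the table is complete enough, else hold it back."""
        if required_inserts > len(self.table):
            self.blocked.append((required_inserts, indices))
            return None
        return self._decode(indices)

    def _decode(self, indices):
        return [self.table[i] for i in indices]


decoder = ToyHeaderDecoder()
# The header block overtakes the two table updates it depends on.
print(decoder.header_block(required_inserts=2, indices=[0, 1]))  # None: held back
print(decoder.insert("user-agent", "demo-client"))               # []: still waiting
print(decoder.insert("x-request-id", "42"))                      # block is released
```

Only the block that depends on missing entries waits; a block whose references are already in the table would decode immediately.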

Improved server push

Introduced in HTTP/2, the server push feature can be thought of as anticipation of a request yet to be made by the client. This is still present in HTTP/3, but its implementation is through different mechanisms and can be restricted. Although server pushes save half a round trip, they still take up bandwidth.

The server sends a PUSH_PROMISE frame over the request stream, showing what would be contained in the request that the push responds to. It then sends the actual response over a new stream.

Server pushes can be limited by the client. Individual pushed streams can be cancelled with a CANCEL_PUSH, even if previously deemed acceptable.

Why is HTTP/3 Important?

TCP has been around for over four decades. It was initially standardized in 1981 through RFC 793. Over the years, it has undergone updates and has proven to be a very robust transport protocol for supporting the growth of internet traffic. However, by design, TCP was never suitable for handling data transmission over a lossy wireless medium. In the early days of the internet, wired connections were the only connections in existence, linking every networked computer, so this was never a problem.

Now, with more smartphones and portable devices than desktops and laptop computers, more than 50% of internet traffic is delivered over wireless. With this growth, overall web browsing experience has deteriorated as response times have increased. Several factors have caused this, the most important of them being head-of-line blocking, as described above, when wireless coverage is inadequate.

Google’s first tests intended to solve HoL proved that implementing QUIC as the underlying transport protocol for some of the popular Google services greatly improved response speed and user experience. By deploying QUIC as the underlying transport protocol for streaming YouTube videos, Google reported a 30% drop in rebuffering rates, which have a direct impact on the video viewing experience. Similar improvements were seen in displaying Google search results.

These gains were more noticeable when operating under poor network conditions, prompting Google to work more aggressively on refining the protocol, before eventually proposing it to IETF for standardization.

With all the improvements resulting from these early trials, QUIC has emerged as an essential ingredient for taking the web into the future. Evolving from HTTP/2 to HTTP/3, with the support of QUIC, is a logical step in this direction.

The best use cases of HTTP/3

The intention with HTTP/3 is to improve the overall web experience, especially in regions where high-speed wireless internet access is still unavailable. Even though HTTP/2 is well suited for some of these applications, HTTP/3 adds value in the following scenarios.

Internet of Things (IoT)

HTTP may not be the preferred protocol for IoT because of its limitations, but there are applications where HTTP-based communication is well-suited. For example, HTTP/3 can address the issues of lossy wireless connection for mobile devices that gather data from attached sensors. This also applies to standalone IoT devices mounted on vehicles or movable assets. Because HTTP/3 has a robust transport layer, access to and from such devices via HTTP is more reliable.

Realtime ad bidding

When an ad is served to web browsers, it is bid on in realtime. Page and user info are sent to an ad exchange, which then auctions them off to the highest-bidding advertiser. All the companies that could serve an ad to a consumer put in bids to be the one to make that impression. It’s an algorithmic competition for space provided by the ad-serving network.

Having a connection that requires only one acknowledgement (a single handshake) massively improves the performance of bidding and lets ads load more responsively, ensuring your page load is not delayed by this flurry of competition. Faster ad loads mean faster page loads.

Microservices

In a microservices mesh, a lot of time is lost going through all the microservices in order to get the data across. HTTP is always the slowest hop. Therefore, the faster you can make each request between each system (in thousandths of a second instead of hundredths), the better the resultant data isolation, and the less replicated data you need on your network.

QUIC’s strength is the relative lack of handshakes, so you can pass those requests in single-digit milliseconds, and your microservices can be relatively pure, allowing for true single responsibility. The benefit of HTTP/3 here is less about throughput of big data, and more about speeding up each micro-interaction.

Web Virtual Reality (VR)

With ever-improving browser capabilities, the content landscape changes accordingly. One such area of change is web-based VR. Although still in its infancy, there are plenty of situations where VR plays a pivotal role in enhancing collaboration. The web holds center stage in facilitating such VR-rich interactions. VR applications demand more bandwidth to render intricate details of a virtual scene and will likely benefit from migrating to HTTP/3 powered by QUIC.

Getting started with HTTP/3

Software libraries supporting HTTP/3

At the time of writing, the HTTP Working Group at IETF has not yet released HTTP/3 as an official standard, so it’s not yet officially supported by popular web servers such as NGINX and Apache. However, several software libraries are available to experiment with this new protocol, and unofficial patches are also available.

Here is a list of the popular software libraries that support HTTP/3 and QUIC transport. Note that these implementations are based on one of the Internet draft standard versions, which is likely to be superseded by a higher version leading up to the final standard published in an RFC.

quiche (https://github.com/cloudflare/quiche)

quiche provides a low-level programming interface for sending and receiving packets over QUIC protocol. It also supports an HTTP/3 module for sending HTTP packets over its QUIC protocol implementation. Additionally, it provides an unofficial patch for NGINX servers to install and host a web server capable of running HTTP/3, as well as wrapper support on Android and iOS mobile apps.

Aioquic (https://github.com/aiortc/aioquic)

Aioquic is a pythonic implementation of QUIC. It also supports an inbuilt test server and client library for HTTP/3. Aioquic is built on top of the asyncio module, which is Python’s standard asynchronous I/O framework.

Neqo (https://github.com/mozilla/neqo)

Neqo is Mozilla’s implementation of QUIC and HTTP/3 using Rust.

If you want to play around with QUIC, check out these open source implementations of the QUIC protocol, maintained by the QUIC working group.

Considerations and limitations when adopting HTTP/3

Differences in streams and HTTP frame types between HTTP/2 and HTTP/3

HTTP/3 begins from the premise that similarity to HTTP/2 is preferable, but not a hard requirement. HTTP/3 departs from HTTP/2 where QUIC differs from TCP, either to take advantage of QUIC features (like streams) or to accommodate important shortcomings of the switch to UDP (such as a lack of total ordering).

HTTP/3’s relationship of requests and responses to streams is similar to HTTP/2’s. However, the details of the HTTP/3 design are substantially different.

Streams

HTTP/3 permits use of a larger number of streams (2^62-1) than HTTP/2. The considerations about exhaustion of stream identifier space apply, though the space is significantly larger such that it is likely that other limits in QUIC are reached first, such as the limit on the connection flow control window.

In contrast to HTTP/2, stream concurrency in HTTP/3 is managed by QUIC. QUIC considers a stream closed when all data has been received and sent data has been acknowledged by the peer.

Due to the presence of other unidirectional stream types, HTTP/3 does not rely exclusively on the number of concurrent unidirectional streams to control the number of concurrent in-flight pushes. Instead, HTTP/3 clients use the MAX_PUSH_ID frame to control the number of pushes received from an HTTP/3 server.
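For readers who want to see how stream identifiers relate to stream types, here is a small sketch. It relies on a detail from the QUIC transport specification rather than from this article: the two least significant bits of a stream ID encode who opened the stream and whether it is bidirectional. The function name is hypothetical.

```python
MAX_STREAM_ID = 2**62 - 1   # upper bound of the stream identifier space


def describe_stream(stream_id: int) -> str:
    """Classify a QUIC stream ID by its two least significant bits."""
    if not 0 <= stream_id <= MAX_STREAM_ID:
        raise ValueError("stream ID must fit in 62 bits")
    initiator = "server" if stream_id & 0x1 else "client"
    direction = "unidirectional" if stream_id & 0x2 else "bidirectional"
    return f"{initiator}-initiated {direction}"


for sid in (0, 1, 2, 3, 4):
    print(sid, describe_stream(sid))
```

HTTP/3 requests travel on client-initiated bidirectional streams, whereas pushes and control data use unidirectional streams, which is why the count of unidirectional streams alone does not bound the number of pushes.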

HTTP frame types

Many framing concepts from HTTP/2 can be ignored on QUIC because the transport deals with them. Because frames are already on a stream, they can omit the stream number. Also frames do not block multiplexing (QUIC's multiplexing occurs below this layer), so support for variable-maximum-length packets can be removed. And because stream termination is handled by QUIC, an END_STREAM flag is not required. This permits the removal of the Flags field from the generic frame layout.
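The slimmer frame layout can be compared side by side. The sketch below assumes the frame layouts from the HTTP/2 and HTTP/3 specifications (a fixed 9-byte HTTP/2 frame header versus a type and length encoded as QUIC variable-length integers); the helper names are made up for this example, and it builds raw bytes only, without talking to any real library.

```python
import struct


def encode_varint(value: int) -> bytes:
    """QUIC variable-length integer: the top two bits of the first byte give the length."""
    if value < 0:
        raise ValueError("value must be non-negative")
    if value < 2**6:
        return struct.pack("!B", value)
    if value < 2**14:
        return struct.pack("!H", value | 0x4000)
    if value < 2**30:
        return struct.pack("!I", value | 0x80000000)
    if value < 2**62:
        return struct.pack("!Q", value | 0xC000000000000000)
    raise ValueError("value does not fit in 62 bits")


def http2_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """HTTP/2 layout: 24-bit length, 8-bit type, 8-bit flags, 31-bit stream ID."""
    header = struct.pack("!I", len(payload))[1:]   # keep the low 24 bits
    header += struct.pack("!BBI", frame_type, flags, stream_id & 0x7FFFFFFF)
    return header + payload


def http3_frame(frame_type: int, payload: bytes) -> bytes:
    """HTTP/3 layout: varint type, varint length; no flags and no stream ID."""
    return encode_varint(frame_type) + encode_varint(len(payload)) + payload


DATA = 0x0              # DATA frame type in both protocols
END_STREAM = 0x1        # HTTP/2 flag; HTTP/3 leaves stream termination to QUIC
payload = b"x" * 100

print(len(http2_frame(DATA, END_STREAM, 1, payload)) - len(payload))  # header bytes: 9
print(len(http3_frame(DATA, payload)) - len(payload))                 # header bytes: 3
```

The stream number and the flags disappear from the HTTP/3 frame because QUIC already knows which stream the frame is on and when that stream ends.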

Frame payloads in HTTP/3 are largely drawn from HTTP/2. However, since QUIC includes many HTTP/2 features (such as flow control), the HTTP/3 mapping does not re-implement them. As a result, several HTTP/2 frame types are not required in HTTP/3. Where an HTTP/2-defined frame is no longer used, the frame ID has been reserved in order to maximize portability between HTTP/2 and HTTP/3 implementations. However, even equivalent frames between the two mappings are not identical.

Many of the differences arise from the fact that HTTP/2 provides an absolute ordering between frames across all streams, while QUIC provides this guarantee on each stream only. As a result, if a frame type makes assumptions that frames from different streams will still be received in the order sent, HTTP/3 will break them.

HTTP/2 specifies priority assignments in PRIORITY frames and (optionally) in HEADERS frames. HTTP/3 does not provide a means of signalling priority. Note that while there is no explicit signalling for priority, prioritization is still taken care of.

HPACK was designed with the assumption of in-order delivery. A sequence of encoded field sections must arrive (and be decoded) at an endpoint in the same order in which they were encoded. This ensures that the dynamic state at the two endpoints remains in sync. QPACK is HPACK’s equivalent in HTTP/3 over QUIC.

Limitations of HTTP/3

Transitioning to HTTP/3 involves not only a change in the application layer but also a change in the underlying transport layer. Hence adoption of HTTP/3 is a bit more challenging compared to its predecessor HTTP/2, which required only a change in the application layer.

The transport layer undergoes much scrutiny by the middleboxes in the network. These middleboxes, such as firewalls, proxies, and NAT devices, perform a lot of deep packet inspection to meet their functional requirements. As a result, the introduction of a new transport mechanism has some ramifications for IT infrastructure and operations teams.

Security services are often built based on the premise that application traffic (HTTP for the most part) will be transported over TCP, the reliability-focused, connection-oriented one of the two protocols. The change to UDP could have a significant impact on the ability of security infrastructure to parse and analyse application traffic simply because UDP is a datagram-based (packet) protocol and can be, well, unreliable.

Another problem with UDP is that TCP is currently the protocol of choice for most web traffic, as well as for well-known services defined by IETF, so default packet filtering policies of firewalls may frown upon prolonged UDP sessions running HTTP/3.

Another scenario where HTTP/3 might become too cumbersome to adopt is for constrained IoT devices. Many IoT applications deploy low form-factor devices with limited RAM and CPU power. This requirement is enforced to make the devices operate efficiently under constrained conditions, such as battery power, low bit-rate, and lossy connectivity.

HTTP/3’s additional transport layer processing, in the form of QUIC on top of existing UDP, adds to the footprint of the overall protocol stack. It makes HTTP/3 just bulky enough to be unsuitable for those IoT devices. A counterpoint is that such situations are scarce, and specialized protocols such as MQTT exist, getting around the need to support HTTP directly on such devices.

Conclusion

HTTP/3 can be thought of as HTTP semantics transported over QUIC, Google's encrypted-by-default transport protocol that uses congestion control over UDP. Not all features of HTTP/2 can be mapped on top of QUIC. Some HTTP/2 features require order guarantees, and while QUIC can provide that guarantee within a single stream, it can’t offer the same across streams. As such, a new revision of HTTP was required.

Two main stated goals of QUIC are to resolve packet-level head-of-line blocking and reduce latency in HTTP connections and traffic. QUIC also implements per-stream flow control and a connection ID that persists during network-switch events. Using UDP instead of TCP allows for multiplexing and lightweight connection establishment, improving the experience of end-users on low-quality networks, though with some reservations about quality of service on high-quality networks.

UDP traffic can often be aggressively timed out at the middlebox level, or commonly blocked inside organizations as a precaution against amplification attacks. In a case like this, clients need to have in place a strategy to fall back to TCP.
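One possible shape for such a fallback strategy is sketched below. It is deliberately library-agnostic: `connect_quic` and `connect_tcp` are hypothetical caller-supplied callables, and the two stand-in functions only simulate a network where UDP is blocked.

```python
def connect_with_fallback(connect_quic, connect_tcp):
    """Try HTTP/3 over QUIC first; fall back to a TCP-based connection on failure.

    Both arguments are caller-supplied callables (hypothetical here) that either
    return a connection object or raise OSError, for example on a timeout.
    """
    try:
        return "h3", connect_quic()
    except OSError:
        return "tcp", connect_tcp()


def blocked_quic():
    # Stand-in for a network path where a middlebox drops UDP traffic.
    raise TimeoutError("no response to QUIC handshake")


def working_tcp():
    # Stand-in for a successful TCP-based connection.
    return "tcp-connection"


print(connect_with_fallback(blocked_quic, working_tcp))
```

A production client would likely race the two attempts or remember which networks block UDP, rather than waiting for a full timeout each time.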

Hardware and kernel support are still lacking; for example, CPUs bear the brunt of extra stack traversals and datagram hand-offs between user-space and kernel space. While true, this is a chicken-egg problem, and an opportunity for hardware vendors to step up to the plate and create futureproof solutions.

With some changes, the IETF has validated the concept of HTTP-over-QUIC, and renamed it HTTP/3 in order to enshrine the new way of binding HTTP semantics to the wire, and also to disambiguate the concept from the QUIC experiment that continues at Google.

Following the positive results shown by Google’s experiments with QUIC, standardization efforts continue at IETF. However, as of July 2020, HTTP/3 is still at the Internet-Draft stage (with multiple implementations), and has not yet advanced to the RFC stage.

IETF is finalizing work on the standard. All major browsers are testing HTTP/3 with their nightly (experimental) builds. No browsers or servers currently offer official support, and there are no guarantees that it will become the de-facto standard despite heavy resource investment by industry and official bodies. However, while it’s baking, developments in this space are definitely ones to watch.

About Ably

Ably is an enterprise-ready pub/sub messaging platform. We make it easy to efficiently design, quickly ship, and seamlessly scale critical realtime functionality delivered directly to end-users. Every day we deliver billions of realtime messages to millions of users for thousands of companies.

We power the apps that people, organizations, and enterprises depend on every day, like Lightspeed System’s realtime device management platform for over seven million school-owned devices, Vitac’s live captioning for 100s of millions of multilingual viewers for events like the Olympic Games, and Split’s realtime feature flagging for one trillion feature flags per month.

We’re the only pub/sub platform with a suite of baked-in services to build complete realtime functionality: presence shows a driver’s live GPS location on a home-delivery app, history instantly loads the most recent score when opening a sports app, stream resume automatically handles reconnection when swapping networks, and our integrations extend Ably into third-party clouds and systems like AWS Kinesis and RabbitMQ. With 25+ SDKs we target every major platform across web, mobile, and IoT.

Our platform is mathematically modeled around Four Pillars of Dependability so we’re able to ensure messages don’t get lost while still being delivered at low latency over a secure, reliable, and highly available global edge network.

Developers from startups to industrial giants choose to build on Ably because we simplify engineering, minimize DevOps overhead, and increase development velocity.

Further reading and resources

Further background on QPACK and HPACK: The Road to QUIC

via https://ably.com/topic/http3
